“What the GPU offers for audio is an almost unbound level of processing”: We investigate the claim that harnessing the GPU can unlock limitless music production potential

The key to more powerful plugins may be the graphics processor that you already have in your computer. We discover how three developers are making this happen right now.


Today's plugin instruments require a lot of processing power. Whether it's the latest analog modeling synthesizer with a sound that’s comparable to hardware, acoustic physical modeling for ultra-realistic violins without samples, or experimental instruments recreating physics and other state-of-the-art processes, your bog-standard CPU is increasingly taxed by all of the heavy lifting. Now add that instrument to a DAW session with other synths and effects, and your poor computer is ready to throw in the towel.

Enter the GPU. The Graphics Processing Unit may have started as a dedicated pixel cruncher but, thanks to its unique structure, it’s now being pressed into all kinds of new duties, including artificial intelligence, neural networks, and lately, audio.

Because of this largely underused chip, powerful new processes are now becoming available to audio plugin developers willing to think outside the box.

We spoke with three of them - GPU Audio, Anukari and sonicLAB - to find out how they’re harnessing the GPU to create software instruments of astonishing realism, scope and power.

The GPU has traditionally done the work of rendering a computer’s graphics, and it does this very well. But lately, some very clever developers have been using it to process audio as well. Can it really make that much of a difference, though? We asked Chris D, the Head of Pro Audio Partnerships at GPU Audio, a leader in the field, about this.

“If you’re happy with one soft synth and a delay, there is probably not much call to get your GPU involved in audio processing,” Chris says. “What the GPU offers for audio processing is an almost unbound level of processing.”

The key to this, he stresses, is the GPU’s ability to process in parallel. It can handle multiple processes at any one time, unlike conventional DSP audio, which is sequential and therefore limited. “Think of the processes like cars on a motorway,” he explains. “Our CPU has one lane for traffic to flow; the GPU has thousands of lanes.”

Evan Mezeske of boutique developer Anukari points out that GPUs, such as the ones in Apple’s M-series chips or those from NVIDIA, remain largely unused when we work with audio.

That idle GPU could be put to work in two ways: pushing the limits of how many plugins or tracks we can run simultaneously, or powering single plugins that require a lot of computational power. Evan’s instrument, also called Anukari, falls into the second category.

“I personally find category two more interesting,” he enthuses, “because it raises the question of what kind of plugins simply couldn't exist without this kind of processing power? I'm interested in new kinds of audio processing that haven't been thoroughly explored before because they were previously computationally infeasible.”

Markus Steinberger, GPU Audio’s R&D Scientist, sees this new evolution of computation as part of the ‘evolving spirit’ of Moore’s Law, the observation first made by Intel co-founder Gordon Moore in 1965, and later revised, that the number of transistors in an integrated circuit (and with it, broadly, the chip’s processing power) doubles roughly every two years.

Now, rather than relying just on more transistors, we can take advantage of smarter architecture and workload distribution. “By offloading demanding audio tasks to the GPU,” Markus says, “GPU Audio unlocks high-throughput, real-time processing that significantly outperforms conventional CPU-bound workflows.”

Evan at Anukari puts it this way: “Insofar as audio processing can translate transistor count into ‘audio goodness,’ this just means that to take full advantage of what modern processors have to offer, the GPU is becoming increasingly attractive. But it's not always so easy to turn more transistors into audio goodness.”

But isn’t it difficult to get the GPU to process audio? “Yes and no,” answers Evan. “The GPU is very, very different from the CPU, and to take full advantage of its power requires a different approach to how a plugin's DSP code is written.”

“Graphics cards were not designed to process audio,” points out Chris at GPU Audio, which has put in about 10 years of R&D on its tech. “They are designed to continually pump out an insane amount of pixels across your computer screen. When you’re not playing games, or rendering complex images, your GPU does next to nothing. Imagine what could be done with all of the unused resources.”

Sinan Bokesoy, the founder of software company sonicLAB and parent company sonicPlanet, explains how he uses the GPU to calculate scene data in some of his applications, which is then used in audio calculations.

“Instead of calculating this data on the CPU alongside the audio waveform processing,” he says, “I use the parallel processing structure of the GPU, which is very efficient for such scenic calculations. GPUs can work together with CPUs to calculate data that feeds audio algorithms efficiently and without low-level programming efforts.”

Ultimately, says Evan, “it's a question of creativity in terms of how to utilize the strengths of GPUs in an interesting way.”

Although gamers will try to get the best GPUs that they can, the good news for music producers is that you don’t need a special GPU to process audio. The one you already have in your computer, such as an NVIDIA chip or the GPU built into Apple’s M-series chips, is likely good enough.

“Normal GPUs are fine,” explains Evan. “Generally, what you get when you pay more is a GPU that is a tiny bit faster, but is massively more parallel. But any modern GPU is pretty much a marvel of technology. All of the Apple silicon GPUs are incredible, and anything from the last few years from NVIDIA is great.”

GPU Audio recommends a minimum spec of NVIDIA Series 10 upwards on Windows, or M1+ for Apple. “NVIDIA released its series 10 GPUs in 2014 and the M1 came out in 2020,” says Chris, “so we’re not talking about the very latest hardware. Our aim is for as many people as possible to experience what the GPU can do for audio.”

Looking at diagrams of Apple’s M chips, especially the Pro and Max models, a significant amount of the IC is devoted to the GPU. That’s a resource just waiting to be exploited. “The Apple M chips have a dedicated GPU which we are harnessing for DSP on Mac OS,” Chris comments. “These parallel processing juggernauts are redundant when you’re making music. It seems crazy not to use the GPU!”

GPU Audio doesn’t make instruments itself; it partners with other developers, allowing them to use its technology to offload processing to the GPU. One of its partnerships is with Audio Modeling and its SWAM line of physically modeled orchestral instruments. These include SWAM-B for brass instruments, SWAM-W for woodwinds, and SWAM-S for strings, plugins that offer a high degree of real-time realism that doesn’t rely on samples.

“A major advantage of the GPU in this context is its extremely high memory bandwidth,” says Markus. “While incoming audio typically arrives at 44.1 to 96 kHz, computing the effect of a room on that signal can require accessing trillions of contributing samples per second.” This can lead to bottlenecks; GPU to the rescue. “This makes GPU Audio a natural fit for high-fidelity, real-time room modeling and immersive spatial audio experiences.”
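To get a feel for the scale Markus describes, here is a rough sketch in Python of direct-form convolution, the textbook way to apply a room's impulse response to a signal. It is not GPU Audio's implementation, and production engines generally use faster FFT-based methods. The function names, the two-second impulse response, and the source and output counts are illustrative assumptions, not figures from the developers.

```python
def convolve_direct(signal, impulse_response):
    """Direct-form convolution: y[n] = sum over k of h[k] * x[n - k]."""
    out_len = len(signal) + len(impulse_response) - 1
    out = [0.0] * out_len
    # Each output sample depends only on the inputs, never on other
    # outputs, so a GPU could hand every value of n to its own thread.
    for n in range(out_len):
        acc = 0.0
        for k, h in enumerate(impulse_response):
            i = n - k
            if 0 <= i < len(signal):
                acc += h * signal[i]
        out[n] = acc
    return out


def macs_per_second(sample_rate_hz, ir_seconds, sources=1, outputs=1):
    """Multiply-accumulates per second for real-time direct convolution.

    Hypothetical helper: one impulse response per source/output pair.
    """
    taps = int(sample_rate_hz * ir_seconds)
    return sample_rate_hz * taps * sources * outputs


# One 48 kHz stream through a 2-second room response:
print(macs_per_second(48_000, 2.0))            # 4608000000
# 64 sound sources rendered to 16 output channels (assumed numbers):
print(macs_per_second(48_000, 2.0, 64, 16))    # 4718592000000
```

Under these assumptions, a single stream already needs billions of sample reads per second, and a modest spatial scene pushes that into the trillions.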

You can also access the company’s tech with the Vienna Power House add-on for VSL’s Vienna MIR Pro 3D and Vienna Synchron Player. This add-on offloads convolution processes to your graphics card, “which is otherwise redundant in music making machines,” says Chris.

GPU Audio has also recently made its SDK (software development kit) available to third-party developers, so we’re likely to see more instruments and effects that make use of the GPU in this way soon.

Now, let’s look in more detail at a few of the specific plugins that are fuelled by parallel GPU processing. Anukari 3D Physics Synthesizer is a unique physical modeling synthesizer that lets you build your own 3D instruments with various physics components like masses and springs as well as oscillators and the usual synthesizer bits. It’s incredibly flexible and powerful, sort of like a modular synthesizer but for physical objects rather than synth modules. Something this complex requires a lot of computational power, both visually and in terms of sound.

“Anukari is doing this great big complicated physics simulation, where the entire physics world needs to be stepped forward in time for each audio sample,” explains Evan, likening it more to the kind of physics engine that you'd find in a video game or something like the analysis tools that an automaker might use to understand physical stresses in a mechanical part. “This fits very naturally into the way that a GPU works, where many calculations can be done all in parallel,” he says. “Anukari does virtually all of its audio processing on the GPU.” In terms of the audio, almost nothing happens on the CPU except commanding the GPU to do its work.
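As a loose illustration of what "stepping the physics world forward for each audio sample" can look like, here is a minimal Python sketch of a chain of masses joined by springs. It is not Anukari's code: the chain layout, the spring constant, mass, damping values, and the function names are all assumptions chosen for the example.

```python
def step_chain(pos, vel, k, mass, damping, dt):
    """Advance a 1D chain of equal masses and springs by one time step.

    Both ends of the chain are attached to fixed walls at displacement 0.
    """
    n = len(pos)
    # Phase 1: every force is computed from the previous state only, so
    # each entry could be calculated by a separate GPU thread.
    forces = []
    for i in range(n):
        left = pos[i - 1] if i > 0 else 0.0
        right = pos[i + 1] if i < n - 1 else 0.0
        spring = k * (left - pos[i]) + k * (right - pos[i])
        forces.append(spring - damping * vel[i])
    # Phase 2: semi-implicit Euler update, again independent per mass.
    new_vel = [vel[i] + dt * forces[i] / mass for i in range(n)]
    new_pos = [pos[i] + dt * new_vel[i] for i in range(n)]
    return new_pos, new_vel


def render(num_masses=8, num_samples=200, sample_rate=48_000):
    """Pluck the first mass and record the last one, one step per sample."""
    dt = 1.0 / sample_rate
    pos = [0.0] * num_masses
    vel = [0.0] * num_masses
    pos[0] = 1.0  # initial "pluck"
    out = []
    for _ in range(num_samples):
        # Illustrative constants, picked to keep the integration stable.
        pos, vel = step_chain(pos, vel, k=1.0e5, mass=0.001,
                              damping=0.002, dt=dt)
        out.append(pos[-1])  # the last mass acts as the pickup
    return out


samples = render()
```

Here the whole simulated world advances once per output sample, and within each step the per-mass work is independent. With eight masses that is trivial for a CPU; with thousands of interconnected objects it becomes the kind of workload a GPU's parallel lanes suit.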

“Of course,” adds Evan, “that's really only half the story, because Anukari has these beautiful 3D graphics that depict the 3D instrument the user has constructed. The graphics also happen on the GPU, but that's not so interesting from an audio perspective.”

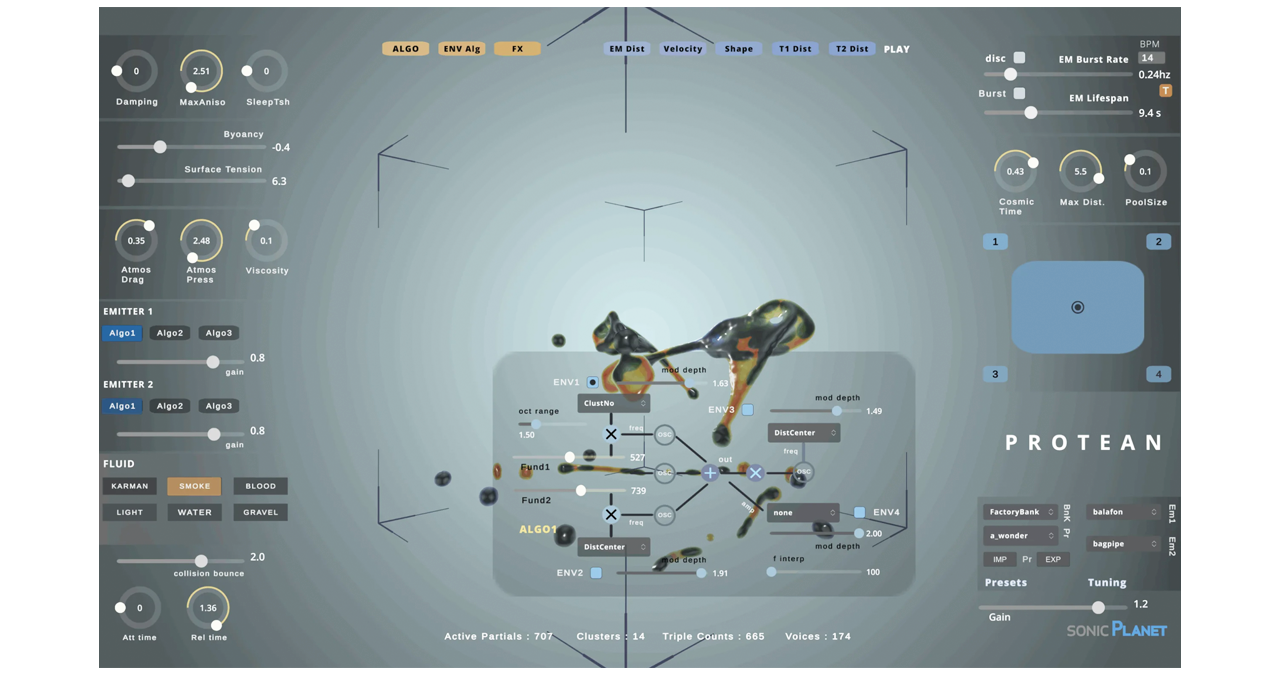

Another instrument that uses physics to help generate sound is Protean from sonicLAB. But instead of physical modeling, it uses a combination of additive and FM synthesis along with particle physics to create wild and experimental sounds, more than 1,000 of them at a time.

“I use multi-core processing for audio algorithms in our Protean software since there are several hundred sine waves calculated simultaneously using multithreaded jobs,” says Sinan. “This is how parallel processing is implemented on CPUs using their multiple cores. A modern GPU might have thousands of cores versus a CPU's eight to 16 cores.”
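The multithreaded-jobs idea Sinan describes can be sketched in a few lines of Python. This is not Protean's code: the function names, the worker count, and the way partials are grouped are assumptions made for illustration. Each job renders its own group of sine waves, and the results are mixed at the end.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def render_partials(partials, num_samples, sample_rate):
    """Sum a group of (frequency_hz, amplitude) sine partials into a buffer."""
    buf = [0.0] * num_samples
    for freq, amp in partials:
        step = 2.0 * math.pi * freq / sample_rate
        for n in range(num_samples):
            buf[n] += amp * math.sin(step * n)
    return buf


def additive_synth(partials, num_samples, sample_rate=48_000, workers=4):
    """Render the partials as independent jobs, then mix the results."""
    # One group of partials per worker; no group needs another's output.
    groups = [partials[i::workers] for i in range(workers)]
    # Note: in CPython, threads show the job structure rather than a real
    # speed-up, because the interpreter runs one thread at a time.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        buffers = list(pool.map(
            lambda group: render_partials(group, num_samples, sample_rate),
            groups))
    # Mix the per-worker buffers sample by sample.
    return [sum(column) for column in zip(*buffers)]


# 300 harmonics of a 50 Hz fundamental with falling amplitudes.
harmonics = [(50.0 * (i + 1), 1.0 / (i + 1)) for i in range(300)]
block = additive_synth(harmonics, num_samples=64)
```

Because no sine wave depends on any other, the same split works whether there are four workers on a CPU or thousands of lanes on a GPU; only the number of groups changes.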

In a recent article, we highlighted GPU audio processing as one way in which music production will change going forward. We asked the developers how they see this technology figuring into things.

“I would say that it's a part of the future,” agrees Evan of Anukari. “I believe there is still infinite potential with really simple stuff. But at the same time, I obviously am interested in what is possible now that really wasn't possible before.”

GPU Audio also sees it as playing a big part in tech moving forward. “If you want ultra low latencies, multi-channel spatial mixing, thousands of convolutions, and real-time machine learning then the GPU is the only way to go,” says Chris, pointing out that CPUs suffer from a physical restriction to their size and also processing capacity due to inherent thermal distribution. “GPUs on the other hand,” he notes, “are designed to be scalable, to run with multiple devices, and also to be used for cloud processing.”

Here is where things really get interesting. Cloud processing, which is something the GPU Audio team is looking into, could provide a way to shunt processing not just over to another chip but to another computer entirely. Says Chris: “This would be handy for example if your mobile device doesn’t have a powerful enough CPU but you still want to access incredibly high levels of processing.”

Another possibility for GPU audio processing is in hardware applications. “Imagine a mixing desk doing complex spatial processing on multiple live inputs,” says Chris. “There is scope for really advancing these existing technologies and harnessing the GPU for so many things.”

And, to take advantage of cutting-edge technologies like AI, we’re going to need the added boost of a GPU. “If we consider machine learning and live AI processing,” says Chris, “which is genuinely forging a new path for audio, these can only be processed in real-time with a GPU. So if you want a mixing desk which can de-noise your live space over multiple channels using live machine learning, it can only be achieved with a hardware-embedded GPU.”

The future just got a little brighter - and a little faster.

Adam Douglas is a writer and musician based out of Japan. He has been writing about music production off and on for more than 20 years. In his free time (of which he has little) he can usually be found shopping for deals on vintage synths.
