“One of the concepts we are exploring is whether an artist could continue to create work after they have passed away”: The groundbreaking tech that will shape the future of music production

This isn’t sci-fi. We look at five areas where music production might evolve, all based on very real technology.

PRODUCER WEEK 2025: Things are changing quickly in music production. It may not immediately feel like it. We still make music in largely the same way that we have for the last few decades, with computers and plugins and outboard gear.

But it’s inside the tech we already have where things are transforming - and at such a rate that many of our current workflows will look quaint and old-fashioned in even just a few years’ time.

New technologies are not evolving in a vacuum either - they’re reinforcing each other.

Many of the emerging music production tech arenas are working in tandem, a web of progress where advancements in one area bolster and spur on growth in others.

Here are five examples of technology that will change music production forever. The exciting thing is that all of these already exist in some form or another. If you can’t buy it off the shelf now, you should be able to soon.

AI…obviously

Artificial intelligence, or AI as it’s generally called now, is everywhere. From your phone to your car to your washing machine, pretty much every aspect of our lives is suddenly AI-assisted. That includes music production as well.

And, as with pretty much every other area of life, the implementation of AI in production is contentious and controversial.

It feels like AI could go in one of two ways right now, either towards a golden age of music production with incredible tools and new possibilities, or to one in which humans are sidelined while AI churns out straight-to-streaming audio slop.

The safe money is on it ending up a bit of both.

“AI growth is unstoppable,” Helmuts Bems, the CEO of Sonarworks, said in a recent study on the coming impact of AI on the music industry. Whether that means humans working with AI (as we do now with AI-assisted mix plugins, for example), wholly AI-generated music, or a bit of both, artificial intelligence will definitely disrupt the industry. Only how it happens, and to what degree, is open for debate.

As musicians and producers, we’ll never stop making music. It’s too important to us to give up, no matter how much the industry changes. So rather than dwell on the negative, let’s keep our heads and focus on the positive: AI will create, and indeed already is creating, exciting new tools for us to work and play with.

One shining example is Synthesizer V from Dreamtonics, vocal production software that lets you write and produce realistic vocals entirely virtually. Given how fast things are moving, it probably won’t be long before AI does the same for synthesis itself.

Much like with smartphones, in a few years’ time we won’t be able to remember how we got along before AI. For better or for worse.

Every instrument virtually rendered

The Center for Computer Research in Music and Acoustics (CCRMA) at Stanford University is famous for having birthed FM synthesis. However, it will probably be another of its innovations, physical modelling, that goes down as CCRMA’s crowning achievement.

A synthesis technique that uses mathematical models to emulate the physical properties of acoustic sound-making devices, it first appeared in hardware synthesisers in the early 1990s.

Prohibitively expensive because of the computing power it required, it was sidelined by sampling as the go-to technique for recreating realistic-sounding acoustic instruments in the digital domain.

Now that computing power has finally caught up with the promise, physical modelling is at last emerging as a powerful alternative to sampling. Sampled instruments, such as those made by Spitfire, let us play pretty much any instrument in a fairly realistic manner, but they still have restrictions, chiefly in articulations (each of which must be recorded separately) and in file size.
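To see why file size balloons, it helps to do the arithmetic. Every combination of note, velocity layer, articulation and round-robin variation is a separate recording. The sketch below computes the uncompressed size of one multisampled instrument; the function name and every figure in the example (40 notes, 4 velocity layers, 10 articulations, 3 round robins, 4-second recordings at 48 kHz/24-bit stereo) are hypothetical, not the specs of any real library, and commercial libraries often use lossless compression, so real on-disk sizes will differ.

```python
def sample_library_bytes(notes, velocity_layers, articulations, round_robins,
                         seconds_per_sample, sample_rate=48000, bit_depth=24, channels=2):
    """Uncompressed size, in bytes, of a (hypothetical) multisampled instrument.

    Every combination of note, velocity layer, articulation and round-robin
    variation is a separate recording that has to be stored and loaded.
    """
    recordings = notes * velocity_layers * articulations * round_robins
    frames_per_recording = int(seconds_per_sample * sample_rate)
    bytes_per_frame = channels * bit_depth // 8  # e.g. 2 channels x 3 bytes
    return recordings * frames_per_recording * bytes_per_frame


# Hypothetical violin patch: 40 notes, 4 velocity layers, 10 articulations,
# 3 round robins, 4-second recordings, 48 kHz / 24-bit stereo.
size = sample_library_bytes(40, 4, 10, 3, 4.0)
print(f"{size / 1e9:.2f} GB")  # prints "5.53 GB"
```

With those made-up figures, each additional articulation adds another 552,960,000 bytes. A physical model, by contrast, stores no recordings at all, only the parameters of the model.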

Physical modelling uses real-time processing to generate any articulation you can imagine (as long as you have a suitably expressive controller, such as an MPE keyboard, to perform it), all without the need for samples, so you no longer need external hard drives full of recordings or huge amounts of RAM.
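For a feel of how a model can make sound without samples, here is the Karplus-Strong plucked-string algorithm, which also came out of Stanford and is often described as a simple ancestor of digital waveguide models. It is a textbook illustration, not how Piano V or any other product mentioned here works: a noise burst stands in for the pluck, a delay line whose length sets the pitch stands in for the string, and a damped two-point average stands in for the energy the string loses on each pass. The function name and default parameters are our own.

```python
import random


def karplus_strong(frequency_hz, duration_s, sample_rate=44100, damping=0.996, seed=0):
    """Synthesise a plucked-string tone with the Karplus-Strong algorithm."""
    rng = random.Random(seed)
    period = int(round(sample_rate / frequency_hz))  # delay-line length sets the pitch
    # The "pluck": fill the string with a burst of white noise.
    line = [rng.uniform(-1.0, 1.0) for _ in range(period)]
    out = []
    for n in range(int(duration_s * sample_rate)):
        i = n % period
        current = line[i]
        nxt = line[(i + 1) % period]
        out.append(current)
        # Averaging neighbours acts as a low-pass filter, so high harmonics die
        # away faster than low ones, much as they do on a real string.
        line[i] = damping * 0.5 * (current + nxt)
    return out


tone = karplus_strong(220.0, 1.0)  # one second of a plucked A3
```

Changing `damping` or the length of the noise burst changes the "material" of the virtual string, which is the appeal of modelling: the sound is a function of parameters rather than a fixed recording.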

It’s no pie-in-the-sky idea, either: physical modelling is already here. Arturia’s Piano V uses the technique to recreate every element of a piano, from its metal strings to its wooden body, and even the way their vibrations interact.

Audio Modelling is another company making use of physical modelling. With its SWAM technology, composers can build up full orchestrations, complete with the interactions of the various ‘players’ in an orchestra pit. You can even place audio recordings of real musicians into the same space.

Of course, you’re not limited to copies of existing instruments. Atoms from Baby Audio imagines what interconnected masses and strings would sound like when excited. If you can imagine it - and devise an algorithm to emulate it - physical modelling can bring it to life.
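One common way to model "interconnected masses" is a chain of point masses joined by springs, stepped forward in time with Newton's laws. The sketch below is a generic mass-spring simulation under our own assumptions (eight masses, fixed anchors at both ends, semi-implicit Euler integration); it is not Baby Audio's algorithm. One mass is struck and another is "listened to", and with these default values the chain resonates at roughly 78 Hz to 443 Hz.

```python
def mass_spring_chain(num_masses=8, mass=0.001, stiffness=2000.0, damping=0.002,
                      strike_index=2, pickup_index=5, strike_velocity=1.0,
                      sample_rate=44100, duration_s=0.5):
    """Simulate a 1-D chain of point masses joined by identical springs.

    Both ends are tied to fixed anchors. One mass is 'struck' (given an initial
    velocity) and the displacement of another is read out as the audio signal.
    """
    dt = 1.0 / sample_rate
    x = [0.0] * num_masses  # displacement of each mass
    v = [0.0] * num_masses  # velocity of each mass
    v[strike_index] = strike_velocity
    out = []
    for _ in range(int(duration_s * sample_rate)):
        for i in range(num_masses):
            left = x[i - 1] if i > 0 else 0.0                # fixed anchor at the left end
            right = x[i + 1] if i < num_masses - 1 else 0.0  # fixed anchor at the right end
            # Hooke's law from the two neighbouring springs, minus a velocity-damping term.
            force = stiffness * (left - 2.0 * x[i] + right) - damping * v[i]
            v[i] += force / mass * dt
        # Semi-implicit Euler: move each mass using its freshly updated velocity.
        for i in range(num_masses):
            x[i] += v[i] * dt
        out.append(x[pickup_index])
    peak = max(abs(s) for s in out) or 1.0
    return [s / peak for s in out]  # normalise to the range -1..1


tone = mass_spring_chain()
```

Change the number of masses, the stiffness or where you strike and listen, and you get a different "instrument" that never existed physically.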

The further evolution of multi-dimensional expression

One reason physical modelling can sound so realistic is the near-infinite range of articulations it’s capable of.

You need to be able to perform those articulations though, and this is where the traditional piano keyboard comes up woefully short.

Limited essentially to note-on and note-off messages plus basic velocity, a keyboard designed for the piano struggles to convey the subtlety and variety of sounds you can get out of, say, a violin or trumpet.

Enter MPE.

Short for MIDI Polyphonic Expression, MPE provides a much wider palette for expression. Conventional MIDI can already handle aftertouch (pressure applied after the initial note-on to modulate pitch or other parameters), but in most setups pitch bend and aftertouch apply to a whole MIDI channel, and therefore to every note at once. MPE gives each note its own channel, so pressure, pitch bend and other modulation can be applied per note (the ‘polyphonic’ part of the name). To really take advantage of what MPE has to offer, though, you’ll need something more expressive than a basic piano keyboard.
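Under the MPE specification, a controller typically reserves one Manager Channel and gives each sounding note its own Member Channel, so ordinary channel-wide messages (pitch bend, channel pressure, and CC74, which MPE uses for the third "slide" or timbre dimension) end up affecting just that note. The sketch below builds the raw MIDI 1.0 bytes for one such note, sending the expression values before the note-on as the spec recommends. The helper name `mpe_note` is hypothetical, and it assumes a Lower Zone (Manager Channel 1, Member Channels 2 to 16) and the spec's default Member Channel pitch-bend range of 48 semitones.

```python
def mpe_note(member_channel, note, velocity, bend_semitones=0.0, pressure=0,
             slide=64, bend_range=48):
    """Raw MIDI 1.0 bytes for one expressive note under MPE (hypothetical helper).

    member_channel is 1-based (2-16 in a Lower Zone whose Manager Channel is 1).
    Because the note owns this channel, the messages below affect only this note.
    """
    ch = member_channel - 1  # the status byte's low nibble is 0-based
    # Map semitones onto the 14-bit pitch-bend range, centred on 8192.
    bend = int(round(8192 + bend_semitones / bend_range * 8192))
    bend = max(0, min(16383, bend))
    return [
        bytes([0xE0 | ch, bend & 0x7F, bend >> 7]),        # pitch bend (LSB, MSB)
        bytes([0xD0 | ch, pressure & 0x7F]),               # channel pressure
        bytes([0xB0 | ch, 74, slide & 0x7F]),              # CC74: slide/timbre axis
        bytes([0x90 | ch, note & 0x7F, velocity & 0x7F]),  # note on
    ]


# Two notes of a chord on separate Member Channels, bent in opposite directions,
# something a single shared pitch-bend wheel could never do.
chord = mpe_note(2, 60, 100, bend_semitones=0.5) + mpe_note(3, 64, 90, bend_semitones=-0.5)
```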

Osmose from Expressive E is that something. Although there are plenty of other controllers and instruments with non-standard shapes that offer all kinds of avenues for expression, Osmose has the advantage of being in the intuitive keyboard shape that you already know. It just adds more, letting you not only press keys but wiggle and slide them.

“The mechanics had to be naturally comfortable for keyboard players, while also enabling new expressive dimensions without breaking that familiarity,” Expressive E CEO Alexandre Bellot said when asked why Osmose has been so successful. “And if Osmose has resonated with so many musicians, it’s largely thanks to our partnership with Haken Audio, with whom we co-developed the instrument.”

Indeed, the sound engine inside Osmose is nothing short of extraordinary, incorporating many kinds of synthesis - including physical modelling. The keyboard also works well with third-party plugins from companies like u-he, AAS and UVI, making it a compelling controller too.

Though the format has been around for a while, it’s exciting to imagine where MPE might take us next. “That is really where our focus is right now,” Alexandre said. “These are incredibly exciting areas to explore, with the potential to unlock musical experiences that are both highly gratifying to play and deeply organic. They offer more nuance, realism, and new kinds of articulation and interaction that many musicians have not yet encountered.”

Super-processors

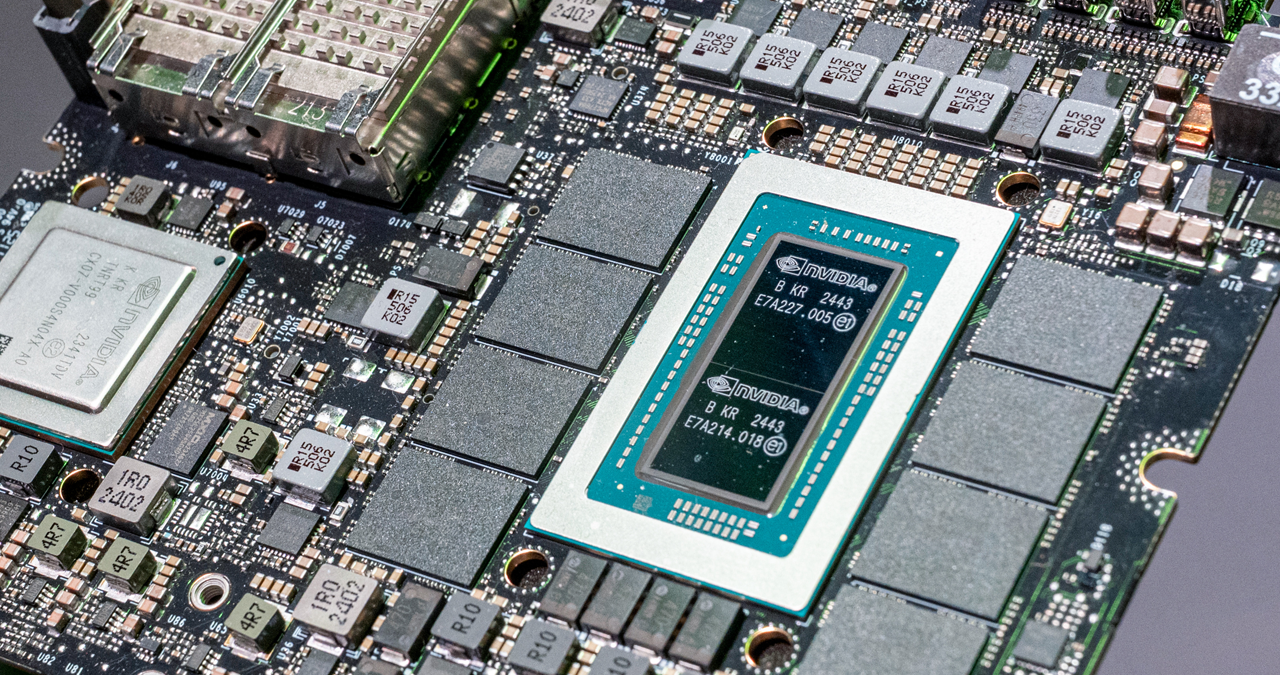

Artificial intelligence, physical modelling and other modern forms of digital technology may be impressive but they also require a lot of computing power. One way that we’ve been able to keep up with these demands is by shunting some of the processing duties over to the GPU.

Modern graphics processing units are fast enough to handle not only graphics rendering and video but the computations required for technologies like AI.

“GPUs have been called the rare Earth metals - even the gold - of artificial intelligence, because they’re foundational for today’s generative AI era,” said GPU powerhouse NVIDIA on its blog.

“GPUs perform technical calculations faster and with greater energy efficiency than CPUs. That means they deliver leading performance for AI training and inference as well as gains across a wide array of applications that use accelerated computing.”

While DAWs and plugins don’t normally use the GPU for audio processing, one company, GPU Audio, has devised a way to harness its parallel processing power for audio.

At a recent trade show, GPU Audio successfully ran more than 100 instances of Neural Amp Modeler, a CPU-intensive plugin that uses deep neural networks to recreate the sound of real guitar amps and cabinets. By taking advantage of the GPU, the company could achieve this normally impossible feat.

GPU Audio’s technology already powers partner products such as Vienna Power House, which offloads convolution processing to the GPU, and Audio Modelling’s Accelerated SWAM product line.
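Convolution shows why some audio work suits a GPU. In direct convolution, each output sample is a weighted sum of past input samples and never depends on other outputs, so in principle thousands of them can be computed side by side. The plain-Python sketch below runs on the CPU and only illustrates that data-parallel structure and its cost; it is not GPU Audio's SDK. Real convolution reverbs usually use FFT-based partitioned convolution, which is far cheaper than the direct form counted here, but the workload is still heavy and highly parallel.

```python
def convolve(signal, impulse_response):
    """Direct (time-domain) convolution: y[n] = sum over k of h[k] * x[n - k]."""
    n_out = len(signal) + len(impulse_response) - 1

    def output_sample(n):
        # Depends only on the inputs, never on other outputs, so every n
        # could be handed to a separate thread, as a GPU would do.
        return sum(impulse_response[k] * signal[n - k]
                   for k in range(len(impulse_response))
                   if 0 <= n - k < len(signal))

    return [output_sample(n) for n in range(n_out)]


def direct_convolution_macs_per_second(ir_seconds, sample_rate=48000):
    """Multiply-accumulate operations per second, per channel, for direct convolution."""
    taps = int(ir_seconds * sample_rate)  # one tap per impulse-response sample
    return taps * sample_rate             # every output sample touches every tap
```

For a hypothetical 2-second impulse response at 48 kHz, `direct_convolution_macs_per_second(2.0)` returns 4,608,000,000 multiply-accumulates per second for a single channel, which is why spreading the work across a GPU's many cores is attractive.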

Now that GPU Audio has made its SDK (software development kit) available for free, we should hopefully begin to see our GPUs pulling their weight more during heavy DAW sessions and reducing those annoying CPU spikes.

Defying death

What if you could continue composing and producing music even after you die? Sounds mad, right?

It may sound like the stuff of science fiction, and yet it’s happening right now at the Art Gallery of Western Australia in Perth.

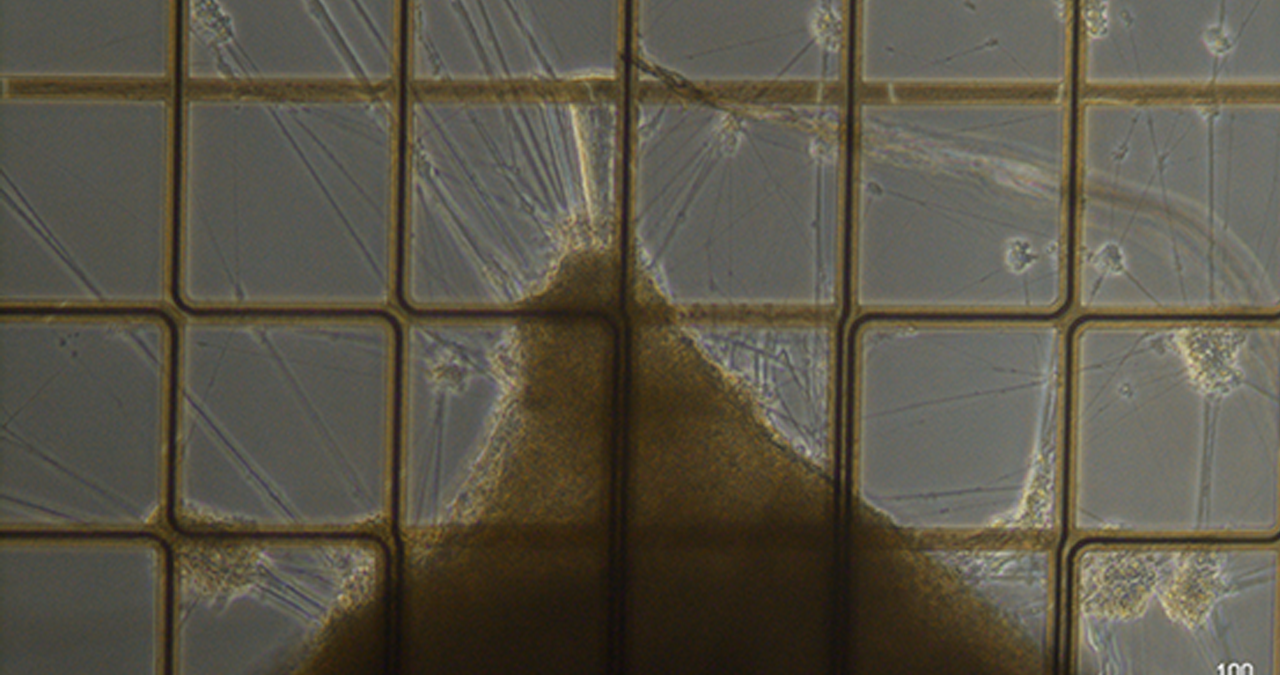

Called Revivification, it features the in vitro (grown outside the body) ‘brain’ of the experimental composer Alvin Lucier, who died in 2021. The gallery houses the late composer’s cerebral organoids: tiny three-dimensional clusters of neural tissue that mimic aspects of a human brain.

“One of the concepts we are exploring with Revivification is whether an artist could continue to create work after they have passed away,” said Matt Gingold, one of the artists who developed the project along with Guy Ben-Ary, Nathan Thompson and neuroscientist Stuart Hodgetts.

“In a way we are allowing 'him' to continue creating forever.”

Lucier's biological material continues to compose and create in ways that neither Lucier himself nor the artists could fully predict.

“The mini brains are ‘embodied’ using a neural interface,” Matt continued, “a set of 64 electrodes that (they) are growing over and around.” These electrodes allow the team to read the neural activity inside the 'brain' and convert it into sound. Interestingly, the sound in the space can be transformed and fed back into the brain as electrical stimulations.

“This is quite different to instruments like (Eurorack biofeedback sensor) Instruo Scion, because this is not just a one-way system,” he explained. “We are able to influence the development and behaviour of the 'brains' by stimulating the electrodes. This creates a 'closed loop' or bio-feedback system, where changes in the sonic landscape are picked up by microphones and converted into electrical stimulations via the same electrodes we use to read activity in the organoids.”
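The team hasn't yet published its hardware and software, so the following is purely a hypothetical sketch of the closed-loop idea described above, not Revivification's code. Electrode activity is turned into sound, and the level a microphone picks up is turned back into stimulation. Every function name, mapping and threshold here is invented for illustration, and the stimulation "level" is an abstract 0 to 1 value rather than a real current.

```python
import math

# HYPOTHETICAL sketch: none of these mappings come from the Revivification project.


def activity_to_partials(spike_counts, base_hz=110.0, max_count=50):
    """Sonify activity: electrode i drives harmonic i+1 of base_hz, louder with more spikes."""
    return [(base_hz * (i + 1), min(count, max_count) / max_count)
            for i, count in enumerate(spike_counts)]


def render_block(partials, sample_rate=48000, n_samples=480):
    """Additive synthesis of (frequency, amplitude) pairs, normalised to stay within -1..1."""
    total = sum(amp for _, amp in partials) or 1.0
    return [sum(amp * math.sin(2 * math.pi * freq * t / sample_rate)
                for freq, amp in partials) / total
            for t in range(n_samples)]


def mic_to_stimulation(mic_block, electrodes, threshold=0.1):
    """Feedback path: if the room is loud enough, stimulate the chosen electrodes.

    Returns {electrode: level}, where level is an abstract 0..1 value set by the mic's RMS.
    """
    rms = math.sqrt(sum(s * s for s in mic_block) / len(mic_block))
    if rms < threshold:
        return {}
    return {e: min(rms, 1.0) for e in electrodes}


# One turn of the loop with made-up spike counts from three electrodes.
partials = activity_to_partials([0, 25, 100])
block = render_block(partials)                               # sound played into the room
stimulation = mic_to_stimulation(block, electrodes=[0, 1])   # here the mic "hears" the block directly
```

In a real installation the microphone would also pick up the room, visitors and any processing applied to the sound, which is what makes the loop unpredictable.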

Revivification is not a commercial product, so don’t expect to be able to grow your own mini brains any time soon. “However,” said Matt, “we are big believers in open source tools and communities, so once we've caught our breath after the exhibition, we'll be publishing more on the hardware and software we created for the project.” Much of the technology they are using is yet to be released to the public, so their research will also feed back into R&D at the research centers and companies that have supported them, including Open Ephys and the Natural and Medical Sciences Institute.

In theory, however, the organoids can live indefinitely, and more can be grown from the stem cells used to start the project, so it’s possible that at some point in the future a part of you could continue to generate music long after you’ve shuffled off this mortal coil.

A cheery thought… or is it?

Adam Douglas is a writer and musician based in Japan. He has been writing about music production off and on for more than 20 years. In his free time (of which he has little) he can usually be found shopping for deals on vintage synths.
