Stability AI's music generator can now edit and transform your samples and produce full-length, structured tracks

Now with the ability to process audio uploaded by the user, Stable Audio 2.0 is edging closer to becoming a revolutionary tool for music-makers

Last year, we reported on the launch of Stable Audio, a text-to-audio AI music generator from the makers of Stable Diffusion capable of producing musical clips of up to 90 seconds based on text prompts provided by the user.

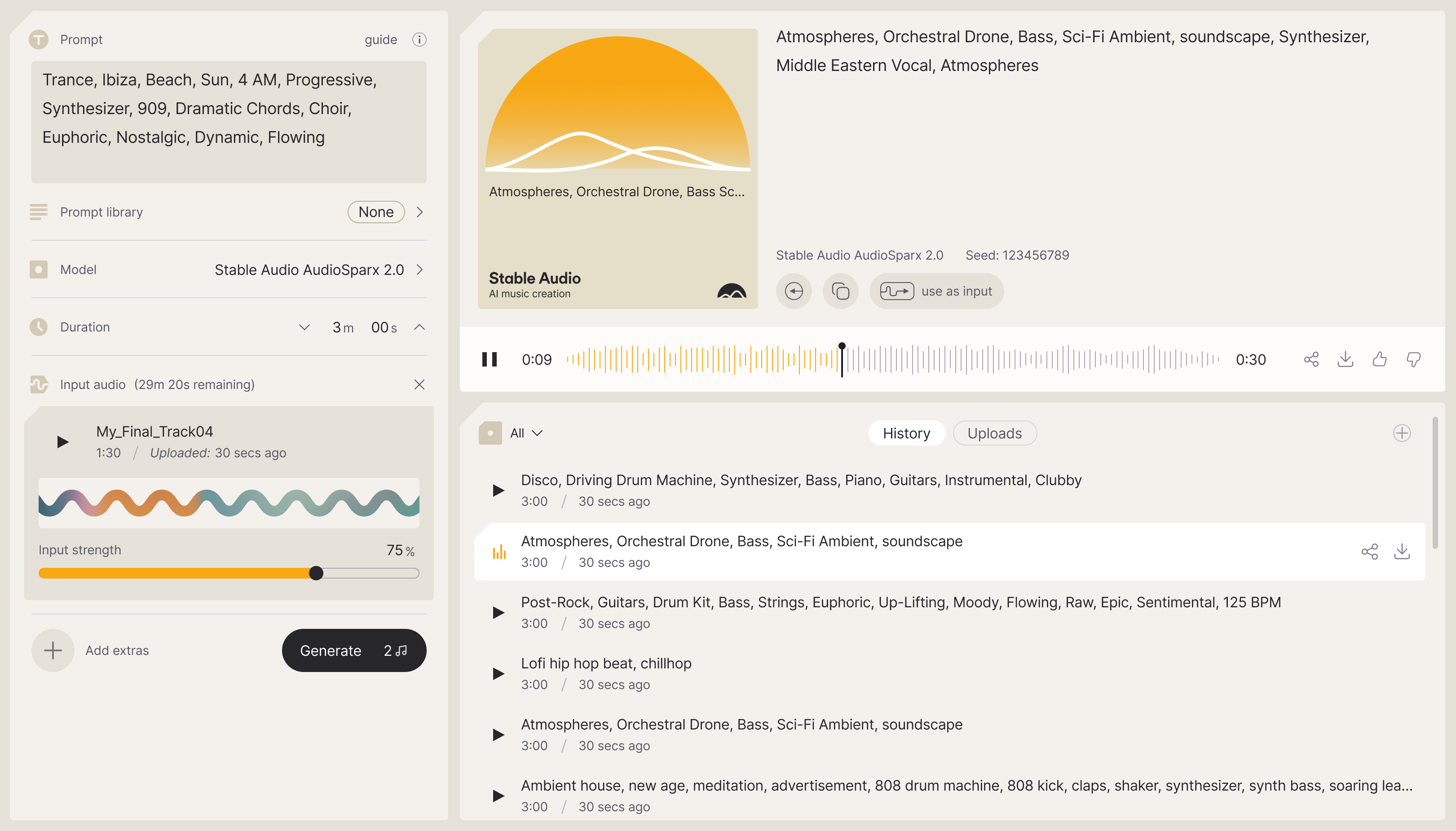

This week, Stability AI has launched Stable Audio 2.0, a substantial update to its AI-powered platform that brings the ability to generate "high-quality" and fully structured tracks of up to three minutes in length, in addition to melodies, backing tracks, stems and sound effects.

What's more interesting than the increase in track length and structural complexity, though, is that Stable Audio 2.0 can now process and transform audio uploaded by the user.

This can be used for all sorts of musical applications; in a demo shared on Stable Audio's YouTube channel, a synth bassline is transformed into the sound of a bass guitar playing the same melody, a snippet of scat singing is used to determine the pitch and rhythm of a drum part, and a beatboxing recording is morphed into a lo-fi hip-hop beat.

Stable Audio says that its audio-to-audio capabilities are intended to help its users "transform ideas into fully produced samples". While still in its early stages, the possibilities here are endless, and this new feature could become a hugely useful tool in a music production workflow - especially if it was integrated into a DAW or a plugin.

We asked Stability AI if this kind of integration was something they might explore in the future, and while they didn't comment specifically on any future projects, they said that "enhancing current production workflows by providing more granular control and editing capabilities" is a focus for the company.

Stable Audio 2.0's ability to generate sound and audio effects has also been improved, giving users the potential to add AI-generated imitations of real-world sounds to their creations.

Want all the hottest music and gear news, reviews, deals, features and more, direct to your inbox? Sign up here.

Like its first iteration, Stable Audio 2.0 was trained on a dataset of 800,000 audio files from the AudioSparx sound library. All of the artists behind the music in the dataset were given the option to 'opt out' and stop their music being used in the model training process.

In November last year, Stable Audio VP Ed Newton-Rex resigned from his post, describing the practices used in training the model as "exploitative".

"Companies worth billions of dollars are, without permission, training generative AI models on creators’ works, which are then being used to create new content that in many cases can compete with the original works," Newton-Rex said in a statement. "I don’t see how this can be acceptable in a society that has set up the economics of the creative arts such that creators rely on copyright."

While Stability AI has refused to provide us with comment on Ed's statement, they reiterated that the Stable Audio model is solely trained on data from their partners at AudioSparx. "All of AudioSparx's artists are compensated and were given the option to 'opt out' of the model training," they told us.

Generative AI-powered musical tools have come under fire in recent months as artists and record labels have begun to question where their unchecked development might lead us in the future. Just this week, a group of 200 artists that includes Billie Eilish and Stevie Wonder signed an open letter demanding that the "predatory use of AI" be curbed.

We asked Stability AI whether they believe that tools such as Stable Audio have the potential to impact the livelihoods of working musicians as they continue to advance in sophistication.

"Our mission at Stability AI is to build the foundation to amplify humanity’s potential, which includes artists," they replied. "Our aim is to expand the creative toolkit for artists and musicians with our cutting-edge technology, thereby enhancing their creativity."

Test out Stable Audio for free or listen to Stable Radio, a 24/7 stream of music created by Stable Audio, below.

I'm MusicRadar's Tech Editor, working across everything from product news and gear-focused features to artist interviews and tech tutorials. I love electronic music and I'm perpetually fascinated by the tools we use to make it.