Musiio CEO Hazel Savage on AI in music making: "It's hard to tell if it's AI, or just someone who isn't very good"

AI-powered tools can EQ your vocals and categorize your sample library, but can an AI write music like you? We're speaking to leading industry figures to find out

Once the domain of sci-fi novels and blockbuster movies, artificial intelligence has gradually infiltrated almost every area of modern technology, while dramatically reshaping the role that these technologies play in our lives - and music tech is no exception.

From AI-powered plugins and intelligent stem separation to deepfake vocal simulations and neural networks that can ‘compose’ music to fit any brief, it might seem as if there’s nothing that these powerful technologies can’t do - and as giants like Apple and Facebook continue to snap up AI-based music generation services, it’s fair to assume that we’ll be seeing (and hearing) much more over the coming years.

Is there a limit to the capabilities of this technology? An AI might be able to suggest a great EQ setting for your vocal take, or intelligently categorize your sample library, but can it write a song that’ll replicate the authentic humanity and emotion of “real” music? And if it could - should we let it? We can’t tell you - but we know a few people who might. In this series of features, we’re speaking to leading figures working in music and AI to find out what exactly it can do, what it can’t, and where it’s going.

First up, we have Hazel Savage, CEO of Musiio, a service that uses AI and machine learning to scan, tag and categorize music. In the same way that your DAW’s BPM counter can tell you what tempo a track is at, Musiio’s technology can ‘listen’ to any song and tell you what instruments it features, what genre it’s in, and even what mood or emotional tone the song conveys.

Could you start off by telling us about what Musiio does?

“We describe ourselves as an artificial intelligence company for the music industry. But what we really are is a data processing company, it just so happens that we use AI to process the data, and the data we process is exclusively audio files.

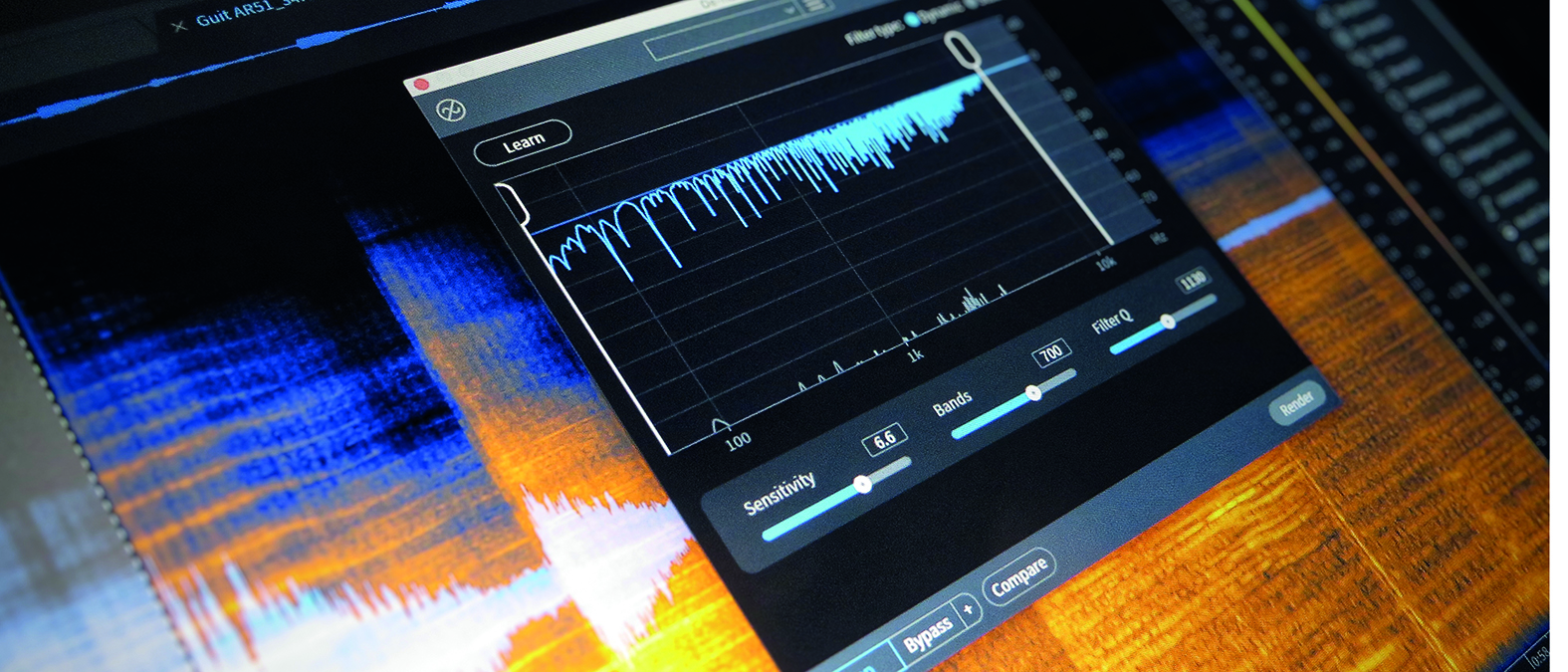

“So what we're really doing is we're actually taking MP3s or WAVs, any kind of digital audio file, and we're turning it into something a computer can read - a sort of a spectrographic transformation from which we're able to then identify a lot of features about music.

“So we’re turning the audio file into something more detailed than a waveform, and then training a computer on which features to look for. If we’re trying to identify whether a song has a vocal or not, you show an AI 5000 songs that have vocals, then you show an AI 5000 songs that have absolutely no vocal - instrumentals. And then you train the AI to see what those two different groups look like. Thanks to the neural networks, it’s learning pattern recognition and looking for features and how they differ, so essentially next time you show it something, it can say with 99% accuracy which of those two buckets it falls into.

“We take that idea and extrapolate it - we could train it to learn 100 genres, or to learn 30 moods, for example. Whatever you want to teach it, you just need to be able to show it enough examples, and have an AI program that can learn it effectively. That’s what we do - we attach rich metadata to audio, and it’s all focused around this core fingerprinting technology.”
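For readers who want to see the shape of that idea, the vocal-versus-instrumental training Savage describes can be sketched in a few lines of Python. This is a toy illustration only, not Musiio's system: the two numeric features per track are invented placeholders for whatever a spectrogram would really provide, the eight "songs" are made up, and a simple logistic regression (effectively a one-neuron network) stands in for the neural networks mentioned in the interview.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train(examples, labels, epochs=2000, lr=0.5):
    """Fit a logistic regression with plain stochastic gradient descent."""
    weights = [0.0] * len(examples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(examples, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = p - y  # positive if the guess was too "vocal"
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

def predict_proba(model, x):
    """Return the estimated probability that a track contains a vocal."""
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def label(model, x):
    """Drop a track into one of the two buckets."""
    return "vocal" if predict_proba(model, x) >= 0.5 else "instrumental"

# Hypothetical features per track: [energy in the vocal range, noisiness].
# Real systems learn from thousands of spectrograms, not eight hand-typed rows.
vocal_tracks = [[0.8, 0.2], [0.9, 0.3], [0.7, 0.1], [0.85, 0.25]]
instrumental_tracks = [[0.2, 0.7], [0.1, 0.8], [0.3, 0.9], [0.15, 0.6]]

examples = vocal_tracks + instrumental_tracks
labels = [1] * len(vocal_tracks) + [0] * len(instrumental_tracks)

model = train(examples, labels)
print(label(model, [0.75, 0.2]))  # a track resembling the vocal examples
print(label(model, [0.2, 0.8]))   # a track resembling the instrumentals
```

Swapping the two buckets for 100 genres or 30 moods changes the labels and the size of the model, but the principle of learning from labelled examples stays the same.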

How does it work with more subjective qualities, like mood, for example, where the perception can differ from person to person?

“Mood is difficult. Many of our tags are factual - whether something has a vocal is a fact, the BPM of a song is not really up for debate. But the mood of a song often is up for debate, and even the genre is too sometimes. If you build up the datasets enough, you have a good cross-reference of examples. And often, 99% of people would agree that this set of songs is happy, or this set of songs is sad. That's not to say that there aren't some people who when they're sad, like to listen to happy music, and some people when they're happy love to listen to sad music.

“It’s definitely not flawless, but the alternative is you just don’t have this data, and you can’t build playlists based on mood. If you as a human being wanted to tag a song, and you took 10 minutes of your time, you could do it to your exact specifications, with your opinion on the genres and the moods and all of the subjective qualities. But the challenge is tagging 5 million tracks a day. Given the volumes that we have now in the music industry, AI is the only solution.”

What kind of companies are using Musiio’s technology, and what are they using it for?

“Epidemic Sound uses our tagging, and Jamendo Music does too. Another great example is Beatstars. That’s where Lil Nas X bought the backing track for Old Town Road. Essentially they get, on average, about 20,000 songs uploaded a day. These are beats - producer-written backing tracks, sometimes full tracks, all going into this marketplace for people to search.

“Before they used Musiio, producers would just upload stuff, without any information - genre, BPM, key, whether it had a vocal or not. There was none of that data. So it was very difficult for customers to search and find what they were looking for. Now, as the music is uploaded, our tags are added, and that goes into their search algorithm to allow people to dig deeper into their catalogue. This creates more opportunities for the producers uploading the beats, and also the end user looking for music to work on.

“Hipgnosis are another client. Rather than using the musical fingerprint to come up with a bunch of tags that go with the song, they take the fingerprint of any given track, and search it against every other fingerprint in the catalogue. They’ve acquired about 60,000 songs in the past few years.

“The idea is, if somebody comes to their sync team and says, we were really hoping to get Adele’s Rolling In The Deep, but she’s not willing to license it - do you have any other pop, powerhouse female vocal tracks? If you’ve just acquired 60,000 songs, that’s hard to know off the top of your head. They have an interface with us where they can just drop in any track, and it’ll fingerprint that track in real time, compare it to everything else in the database, and offer an alternative. It’s like Google’s reverse image search, but with audio.”
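The "reverse image search, but with audio" comparison can also be sketched in code. The example below is a rough illustration under stated assumptions: the interview does not say what a Musiio fingerprint looks like or how two fingerprints are compared, so here each fingerprint is assumed to be a short list of numbers and similarity is assumed to be cosine similarity. The track names and values are invented.

```python
import math

def cosine_similarity(a, b):
    """Score how closely two fingerprints point in the same direction (1.0 = identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero fingerprint matches nothing
    return dot / (norm_a * norm_b)

def most_similar(query, catalogue, top_k=3):
    """Compare a query fingerprint with every track and return the best matches."""
    scored = [(title, cosine_similarity(query, fingerprint))
              for title, fingerprint in catalogue.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical catalogue: titles and fingerprint values are placeholders.
catalogue = {
    "track_a": [0.9, 0.1, 0.8],
    "track_b": [0.1, 0.9, 0.2],
    "track_c": [0.8, 0.2, 0.7],
}

# Fingerprint of the reference track a sync team might drop in.
query = [0.85, 0.15, 0.75]

results = most_similar(query, catalogue, top_k=2)
for title, score in results:
    print(f"{title}: {score:.3f}")
```

Comparing the query against every track one by one is fine for a sketch; a catalogue of 60,000 songs searched in real time would more likely rely on some form of indexing, which is beyond what the interview describes.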

Do you think Musiio’s technology could impact producers and musicians, people making music?

“When I tell people I have a music AI company, most people's first thought is, you must be using AI to write music. Because the same theory holds true - if you have a large enough number of songs, you could train an AI, show it what pop songs look like, and then ask it to reproduce something. There's definitely some controversy in that. If it's just basically an amalgamation of 5000 other songs, should the copyright go to the 5000 songs in the original data set?

“I don’t have an answer for that. I’m trying to differentiate between the AI that Musiio does, which is a system of descriptive AI, and the other side, the creative AI, using AI to replicate creativity. I'm relatively outspoken on not being a huge fan of AI for creativity. I actually did a project with the guys at Rolls-Royce on ethical AI, around this exact subject. The reason Musiio works so well is that nobody wants to sit around and tag thousands of songs a day. It’s not fun work. There’s nothing creative about it.

“There doesn’t need to be a person doing it for it to be done well, or efficiently. So we’re not replacing cool jobs that humans do. I always think that AI should be doing either something that nobody wants to do, or something that wasn’t previously possible. And when it comes to tagging 5 million tracks a day, with all the will in the world, no human being could do that. I think we calculated it would take something like 83 years.

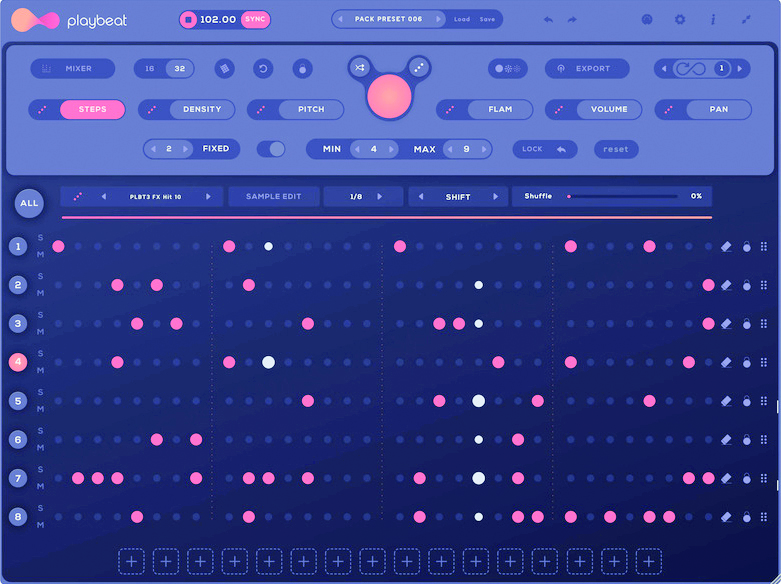

“The issue with using AI to create music is that people create music without needing a financial incentive. I got my first guitar when I was 13. Nobody paid me to learn the instrument, nobody paid me to practice. There’s no shortage of people who want to write music, and no shortage of drive for people to do it. But nobody’s sat in their bedroom, tagging a couple hundred songs a day just for the fun of it. So I really question whether we need creative AI. It’s fun academically, and it’s cool to see what it can do. But is it something humans need assistance with? I’m not sure I believe it is.

“I’m a huge Cardi B fan. Part of what I like about her, it’s not just her music, but it’s her videos, her merchandise, her Instagram stories. Even if an AI could replicate one of her tracks, that’s not the only thing about her that we like. I’m interested, I love to see what’s going on in the space, but my personal opinion is that AI writing music doesn’t bring a lot of value.”

Do you think AI will ever reach a level of sophistication where it can match up to authentic human expression?

“I don’t think it’s there, currently. Somebody played me a bunch of piano music that an AI had written, I can’t remember whose research it was. And at the minute I feel like it’s at the point where it’s hard to tell if it’s AI, or just someone who isn’t very good. I think it’s still five to ten years off being something that can really fool you into thinking that this is a talented artist. I think we’re a little way off from that.

“AI isn’t good at context - it doesn’t have much of a concept of start and stop. It can just go straight for an hour. And then as a human, you might be able to find three minutes within that that are okay. But it can’t start and stop itself. And the longer you leave it, the worse it gets, it just deteriorates over time.

“Visual media is much further along than music. It can certainly produce a picture that might fool you into thinking that a human created it. But I’m not sure why that’s valuable, other than a kind of parlour trick. There’s no human backstory to it - there’s an academic backstory, on how the researchers trained the model, and what datasets they used. But there’s no Van Gogh living in a squat and cutting his ear off. There’s none of that human connection we love.”

A lot of what we love about music is that it’s transmitting something from person to person, it’s a kind of communication - but does AI have anything to communicate?

“Exactly. They say music is the universal language, and we know that languages can be learned. So it makes sense that you could teach an AI a language - but would you bother talking to a chatbot, when you could talk to a human? It’s the same principle.”

I suppose the worry with this kind of tech is that somebody like Spotify gets hold of it and creates a whole library of AI-generated music that removes the need for any real musicians or producers.

“That is one of the challenges. They definitely have the technology already, as they have one of the biggest music research and development teams in this space. I think their original idea was that you could build an AI technology that would scan a song you’re uploading and tell you, if this was 30 seconds longer, you could get X amount of more views. Or if you switch this song into a minor key, you’ll see 25% more engagement.

“I think they probably have the data to do that. But I’m not sure it’s something they would want to put their name behind. There’s always what’s technically possible, and there’s what people want to be known for. I think what you were saying is a bigger problem - if you generate all that music and pad out all the playlists and get millions of plays, are you directly taking money from human artists that could have written that and been paid for it?”

I'm MusicRadar's Tech Editor, working across everything from product news and gear-focused features to artist interviews and tech tutorials. I love electronic music and I'm perpetually fascinated by the tools we use to make it.