Producer uses AI to make his vocals sound like Kanye West: "The results will blow your mind. Utterly incredible"

It's becoming possible for anyone to use an AI copy of any artist's vocal in their own music. Where could this lead, and how will we navigate the legal and ethical implications?

Artificial intelligence is becoming an increasingly influential presence in the world of music technology. Recent years have seen AI integrated into a variety of plugins useful for everything from mixing to drum synthesis, while tools like Google's MusicLM and Riffusion are making huge advances in their ability to generate sounds (and entire tracks, almost) at the click of a button.

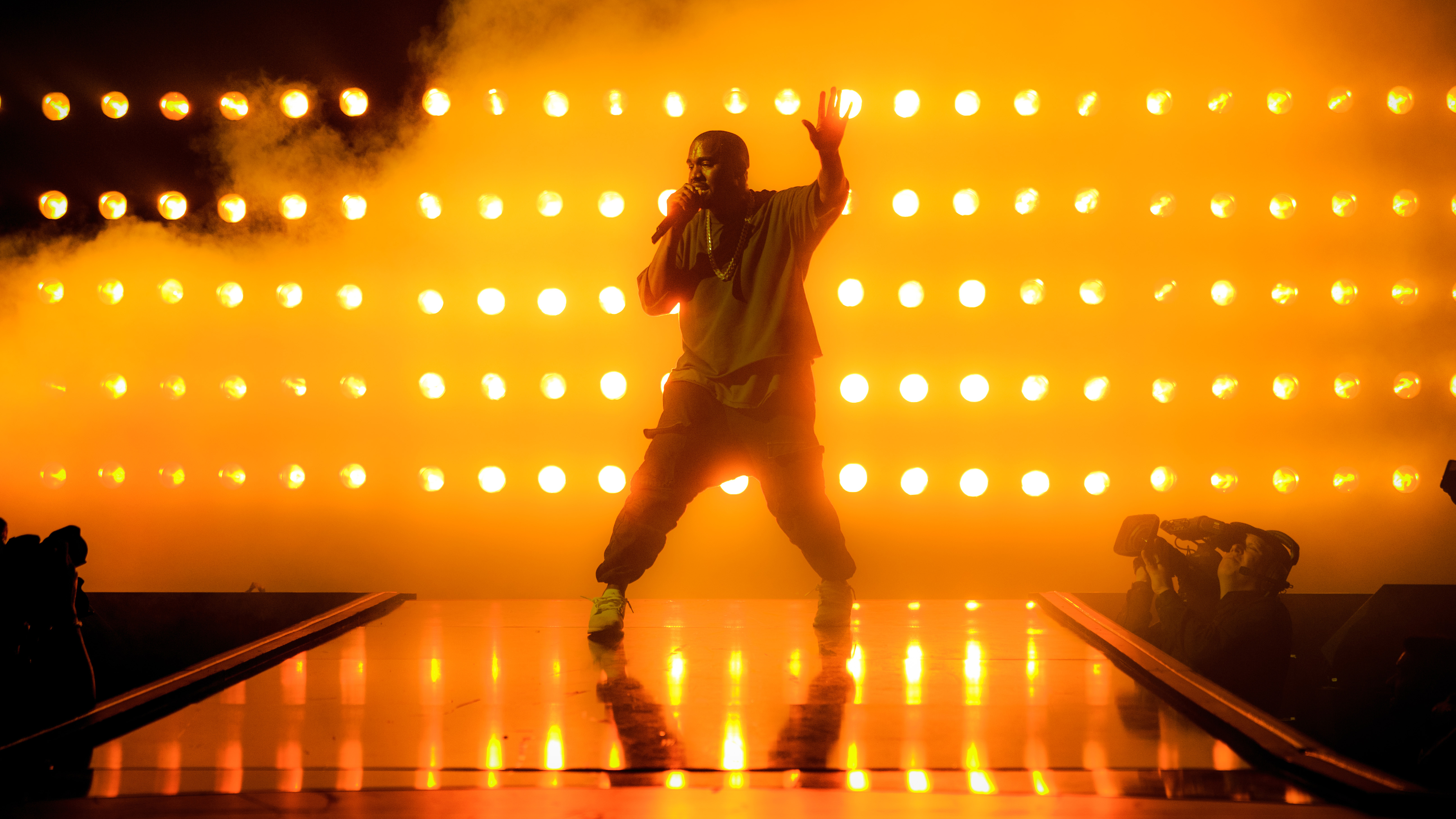

In yet another quantum leap forward for AI-powered music-making, producers are using AI to transform their vocals into the sound of another artist's voice. In a video posted to Twitter, entrepreneur and tech influencer Roberto Nickson finds a Kanye-style beat on YouTube, writes eight bars and records them over the beat, before replacing his own voice with an AI-generated version of Kanye West's.

The results are impressively realistic. The majority of the verse sounds absolutely spot-on and could easily convince the average listener of its authenticity; only one or two words, early on in the recording, sound a little off. It's worth noting, though, that the lyrics and delivery are inferior to Kanye's - two things AI can't successfully imitate, just yet.

Nickson also used the technology to create alternate versions of popular songs, producing versions of Justin Bieber's Love Yourself, Frank Ocean's Nights and Dr. Dre's Still D.R.E. that feature AI Kanye (or Kany-AI, as we've taken to calling him) on the vocals.

To transfer the timbre of Kanye's vocals to his own, Nickson followed a YouTube tutorial that demonstrates how to use Google Colab to access an existing AI model that's been trained on Kanye's voice. The process isn't complex, just a little inconvenient. Once this type of technology becomes streamlined and integrated into the DAW, it's likely to have profound implications for the music industry.

"All you have to do is record reference vocals and replace it with a trained model of any musician you like," Nickson says, considering where this type of AI could lead us in the future. "Keep in mind, this is the worst that AI will ever be. In just a few years, every popular musician will have multiple trained models of them."

Though this kind of style transfer is fascinating from a technical standpoint, its legal status is unclear. The right of publicity, defined as "the rights for an individual to control the commercial use of their identity", is protected in a number of countries and would likely prohibit artists from using AI versions of another artist's vocals in commercially released music without authorization.

Just a few months ago, rapper Yung Gravy was sued for violating Rick Astley's right of publicity after imitating his vocal style in the song Betty (Get Money). The lawsuit points to a 1988 case in which Ford Motor Co. was successfully sued for hiring a singer to imitate Bette Midler's voice in a commercial.

In the replies to Nickson's Twitter thread, he rightly observes that "there will be a lot of regulatory and legal frameworks that will have to be rewritten around this. We will have to figure out how to protect artists."

Once this tech is brought into the DAW, we can envision a future where artists sell their own voice models to be used in AI-powered plugins by fans looking to recreate the voice in their own tracks. Perhaps this could be a new kind of merchandise, or even a way to collaborate remotely: a rapper or vocalist could feature on a thousand songs in a day without ever visiting a studio or uttering a single word.

Twitter user Chorus of the Forest asks whether this could be a moment of significance comparable to the advent of sampling in hip-hop. "Music was thought to be singing and playing instruments, until technology allowed you to make music out of other existing music", he continues. "That’s now happening again, but on an atomic scale. It’s about to be god mode activated for everyone."

As with almost every development in AI, we're faced with both creative possibilities and the potential for abuse. Once this technology is powerful enough to be totally convincing and easily accessible, the market could become flooded with so many AI imitations of voices that it becomes impossible to figure out what's real and what's fake.

"Things are going to move very fast over the next few years," Nickson comments. "You're going to be listening to songs by your favourite artists that are completely indistinguishable, you're not going to know whether it's them or not."

"The possibilities are endless, but so are the dangers," Nickson continues in a separate tweet. "These conversations need to be happening at every level of society, to ensure that this technology is deployed ethically and safely, to benefit all of humanity."

Nickson isn't the first to apply this kind of AI-powered technique to vocals: in a TED Talk shared last year, musician, producer and academic Holly Herndon demonstrated another artist singing in real time through an AI model trained on Herndon's own voice.

And just like that. The music industry is forever changed. I recorded a verse, and had a trained AI model of Kanye replace my vocals. The results will blow your mind. Utterly incredible. pic.twitter.com/wY1pn9RGWx (March 26, 2023)

I'm MusicRadar's Tech Editor, working across everything from product news and gear-focused features to artist interviews and tech tutorials. I love electronic music and I'm perpetually fascinated by the tools we use to make it.